I am a software engineering professional who works a lot with the family of technologies commonly referred to as “AI”. _This document does not represent the views of my employer. These are my personal views and ramblings._

I have been working with these systems professionally and academically for over a decade. I hold a PhD in which I used a subset of these technologies to build statistical links and models that I still consider to be interesting and useful to society.

I am interested in a subset of “AI” technologies as a hobby. I write about them and build projects with them too.

Here I lay out how I think about, talk about and use AI and what I think are and are not acceptable uses.

AI Disambiguation

At the time of writing, when most people say AI they are talking specifically about systems powered by Large Language Models. The term Artificial Intelligence has long been a marketing term, a bogeyman that shifts in meaning based on trends in computing. To discuss these technologies, we need to pin them down and be specific.

When I started my studies, AI typically meant a combination of technologies that included Machine Learning and rule/logic based systems that behave ‘intelligently’.

Principles

Here are some principles that I will generally stand by when it comes to the development and application of AI (in the broadest sense) and technology.

Everything is nuanced

Before I make further broad, sweeping generalisations, let me make a meta-generalisation: everything is multi-faceted and nuanced, and it’s impossible to draw neat boundaries around the things that I discuss here. Everything I say is more of a rule of thumb than an absolute.

Everything is political

It’s important to be mindful about how we deploy and use technology, who it empowers and who it impedes.

No-one is Perfect

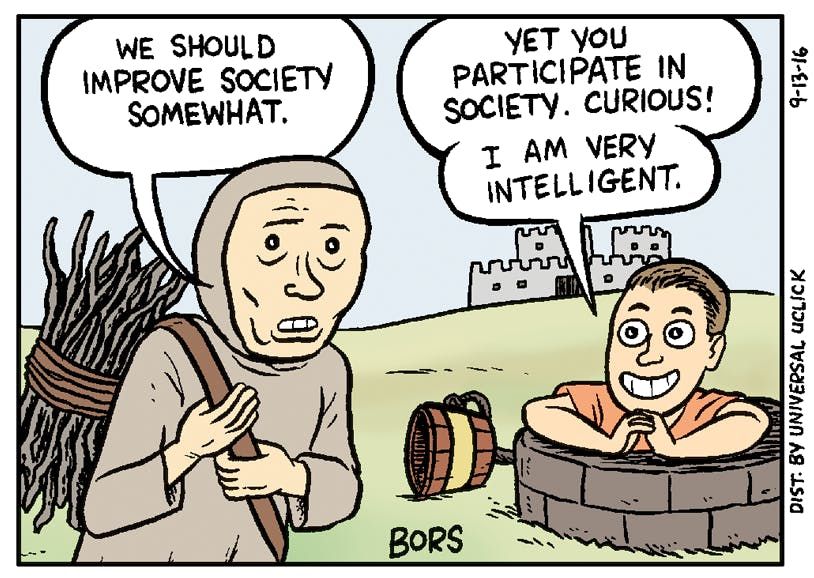

Humans are fallible and regularly make mistakes. We absolutely must avoid no-true-scotsman-infighting if we are going to improve society. It is possible to disapprove of the worst aspects of society whilst still participating in it.

Technology should empower people

I don’t mean this in an SVL tech bro way. I mean this sincerely:

- We should never force people to become a Blame Fuse, i.e. a necessary human scapegoat in a mostly-automated-but-somewhat-problematic workflow.
- We should avoid use cases that involve making automated decisions based on false dichotomies within our society.
- We should avoid exacerbating the already-present dystopian panopticon by collecting too much data and aggregating it centrally.

Where I use and don’t use LLMs

Let’s get into the meat of this since it’s the thing most people reading this page probably care about.

Speech to Text

I regularly use STT to help me write my blog posts and organise my thoughts in notes. I love STT and I think it is revolutionary for a large number of use cases. That said, even modern STT models like Whisper are not 100% accurate. They will often make mistakes or mishear you, just like a human transcriber might. When I’m working with these systems, I’ll typically dictate a sentence or two and then check the output to make sure that it matches what I actually said.

Generally, these models are pretty good if you’re in a quiet room with limited background noise and you’re speaking clearly and enunciating properly. If you’re using rare or made-up words, the system might miss what you’ve said. I also find that it’s best to say each letter of an acronym out loud rather than trying to pronounce the acronym as a word.
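If you want to put a rough number on "not 100% accurate", transcription quality is commonly measured as word error rate (WER): the word-level edit distance between what was said and what was transcribed, divided by the number of words spoken. Below is a minimal sketch of that calculation. The function name and the example sentences are my own illustration, not part of any STT library, and it assumes you have a trusted reference transcript to compare against.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] is the edit distance between the reference words processed
    # so far and the first j words of the hypothesis.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (ref_word != hyp_word)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in the output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical example: one misheard word out of five.
said = "the quick brown fox jumps"
heard = "the quick brown box jumps"
print(f"WER: {word_error_rate(said, heard):.2f}")
```

This simple version ignores punctuation and casing conventions, so treat the result as a rough indicator rather than a benchmark figure.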

Assuming these systems are perfect and using them to save time in medical or other high-stakes settings is inappropriate and wrong, especially when the doctor or practitioner is later held accountable for errors that the model made.