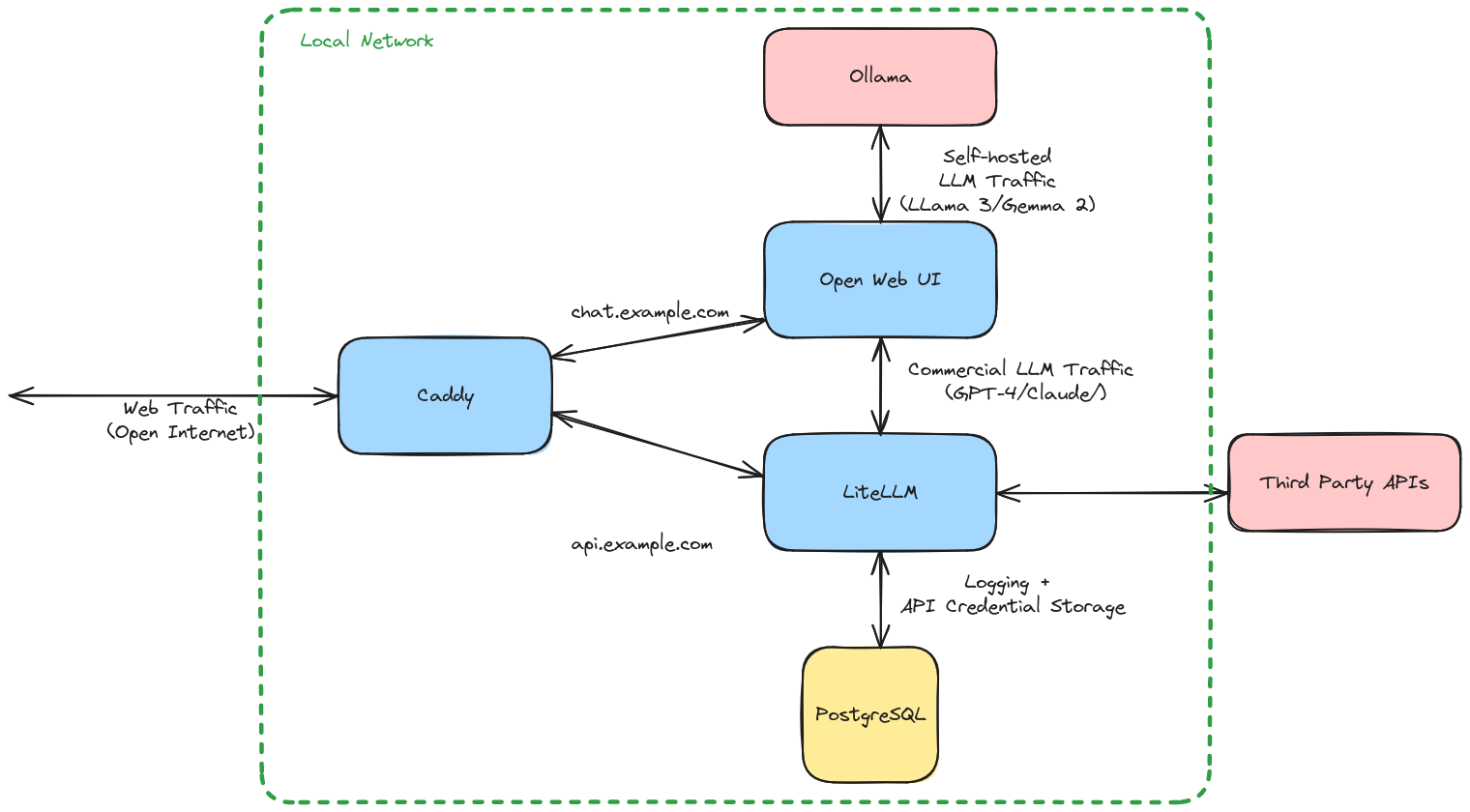

This guide shows a complete setup for running Open Web UI on your own server, with the ability to call local LLMs as well as commercial LLMs such as GPT-4, Anthropic Claude, Google Gemini and Llama 3 served by Groq.

Overall Setup

- We will use Open Web UI as the user interface for talking to the models.

- We will use Ollama for running local models. NB: this is optional; the setup also works with third-party APIs alone.

- We will use LiteLLM for calling out to remote models and tracking usage/billing.

- LiteLLM uses PostgreSQL for storing data relating to API keys, API calls, logs, etc.

- We will use Caddy as a reverse proxy so that we can use the chat UI over the open internet.

Docker Compose

Open Web UI

We will stand up Open Web UI and make sure it has somewhere to store its data and a TCP port so that we can access it over the network:

```yaml
ui:
  image: ghcr.io/open-webui/open-webui:main
  restart: always
  ports:
    - 8080:8080
  volumes:
    - ./open-webui:/app/backend/data
  environment:
    - "ENABLE_SIGNUP=false"
    - "OLLAMA_BASE_URL=http://ollama:11434"
```
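To confirm the container is up before putting a proxy in front of it, a small polling script can help. The sketch below is a minimal Python example resting on assumptions that do not come from this guide: that Open Web UI answers on localhost:8080 (matching the port mapping above) and that it exposes a /health endpoint returning JSON such as {"status": true}. The fetch function is injectable so the retry logic can be exercised without a running server.

```python
"""Poll Open Web UI until it reports healthy (sketch, not an official tool)."""
import json
import time
import urllib.error
import urllib.request
from typing import Callable

# Assumed endpoint: host port 8080 from the compose file plus a /health route.
HEALTH_URL = "http://localhost:8080/health"


def fetch(url: str, timeout: float = 5.0) -> bytes:
    """GET a URL and return the raw response body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read()


def is_healthy(body: bytes) -> bool:
    """Interpret a health-check body; anything unparsable counts as unhealthy."""
    try:
        return json.loads(body).get("status") is True
    except (ValueError, AttributeError):
        return False


def wait_until_healthy(
    url: str = HEALTH_URL,
    attempts: int = 30,
    delay: float = 2.0,
    get: Callable[[str], bytes] = fetch,
) -> bool:
    """Retry the health check; return True as soon as it succeeds."""
    for _ in range(attempts):
        try:
            if is_healthy(get(url)):
                return True
        except OSError:
            pass  # container still starting or port not open yet (URLError is an OSError)
        time.sleep(delay)
    return False
```

After `docker compose up -d`, calling `wait_until_healthy()` returns True once the UI responds, or False after 30 failed attempts with the default settings.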

(Optional) Ollama

If we are using Ollama, we define the service and also reserve GPU resources for it (if available):

```yaml
ollama:
  image: ollama/ollama
  restart: always
  environment:
    - OLLAMA_MAX_LOADED_MODELS=2
    - OLLAMA_FLASH_ATTENTION=true
    - OLLAMA_KEEP_ALIVE=-1
  ports:
    - 11434:11434
  volumes:
    - ./ollama:/root/.ollama
  deploy:
    resources:
      reservations:
        devices:
          - driver: nvidia
            count: 1
            capabilities: [gpu]
```
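Once the service is running, you can talk to Ollama directly over its HTTP API, the same API Open Web UI reaches through OLLAMA_BASE_URL. This is a minimal sketch, assuming the port is published on localhost:11434 as above and that a model called llama3 has already been pulled; the model name and prompt are placeholders.

```python
"""Query the Ollama service over its HTTP API (sketch)."""
import json
import urllib.request

# Assumed: port 11434 published on the host, as in the compose file.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_body: bytes) -> list[str]:
    """Extract model names from a GET /api/tags response."""
    return [m["name"] for m in json.loads(tags_body).get("models", [])]


def generate_request(prompt: str, model: str = "llama3",
                     base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST /api/generate request ("llama3" is a placeholder)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "llama3") -> str:
    """Send the request and return the model's complete reply text."""
    with urllib.request.urlopen(generate_request(prompt, model), timeout=300) as resp:
        return json.loads(resp.read())["response"]
```

`generate("Why is the sky blue?")` returns the whole reply as one string because `stream` is false; with streaming left on, Ollama instead sends one JSON object per line.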

Inbound HTTP Traffic

We will run a Caddy instance inside the same Docker Compose network as our UI and LiteLLM services, so that it can route inbound traffic to them.

We have set up two subdomains:

- chat.example.com, which will serve Open Web UI requests

- api.example.com, which will serve requests to the AI APIs and also provide an admin interface.

```yaml
caddy:
  image: caddy:2.7
  restart: unless-stopped
  ports:
    - "80:80"
    - "443:443"
    - "443:443/udp"
  volumes:
    - ./caddy/Caddyfile:/etc/caddy/Caddyfile
    - ./caddy/data:/data
    - ./caddy/config:/config
```

We also need to create our caddy config file ./caddy/Caddyfile that tells the reverse proxy where to send inbound traffic.

```
chat.example.com {
	# Open Web UI listens on 8080 inside its container (see the ui service above)
	reverse_proxy ui:8080
}

api.example.com {
	reverse_proxy litellm:4000
}
```
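With both routes in place, any OpenAI-style client should be able to reach the remote models through api.example.com. The Python sketch below assumes that LiteLLM's OpenAI-compatible /v1/chat/completions endpoint sits behind that route, that sk-REPLACE-ME stands in for a key you have issued in LiteLLM, and that gpt-4 is a model name configured in LiteLLM; all three are placeholders, not values from this guide.

```python
"""Call a remote model through LiteLLM via the api.example.com route (sketch)."""
import json
import urllib.request

API_BASE = "https://api.example.com"
API_KEY = "sk-REPLACE-ME"  # placeholder: substitute a key issued by your LiteLLM instance


def chat_request(message: str, model: str = "gpt-4",
                 base_url: str = API_BASE, key: str = API_KEY) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request ("gpt-4" is an assumed alias)."""
    body = {"model": model, "messages": [{"role": "user", "content": message}]}
    return urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {key}"},
        method="POST",
    )


def reply_text(response_body: bytes) -> str:
    """Pull the assistant's reply out of a chat completion response."""
    return json.loads(response_body)["choices"][0]["message"]["content"]


def chat(message: str, model: str = "gpt-4") -> str:
    """Send one user message through Caddy to LiteLLM and return the reply."""
    with urllib.request.urlopen(chat_request(message, model), timeout=120) as resp:
        return reply_text(resp.read())
```

Because LiteLLM accepts the same request shape for every provider, switching to Claude or Gemini should only mean changing `model` to the corresponding name in your LiteLLM configuration.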