Rumours that the famed Transformer architecture that powers GPT, Claude and Llama, won’t keep getting better with more compute (the scaling law) have been around for a while. Even recently, AI companies and pundits have been denying that this is the case, hyping upcoming model releases and raising obscene amounts of money. However, over the last few days leaks and rumours have emerged suggesting that the big silicon valley AI companies are now waking up to the reality that the scaling law might have stopped working.

So what happens next if these rumours are true and where does that leave the AI bubble? How quickly and likely are we to break out of a plateau and get to more intelligent models?

A Brief History of LLMs and How We Got Here

To those unfamiliar with AI and NLP research, ChatGPT might appear to have been an overnight sensation from left field. However, science isn’t a “big bang” that happens in a vaccum. Sudden-seeming breakthroughs are usually the result of many years of incremental research reaching an inflection point. GPT-4o and friends would not be possible without the invention of The Transformer 5 years prior and a number of smaller advancements leading up to the launch of ChatGPT in 2022.

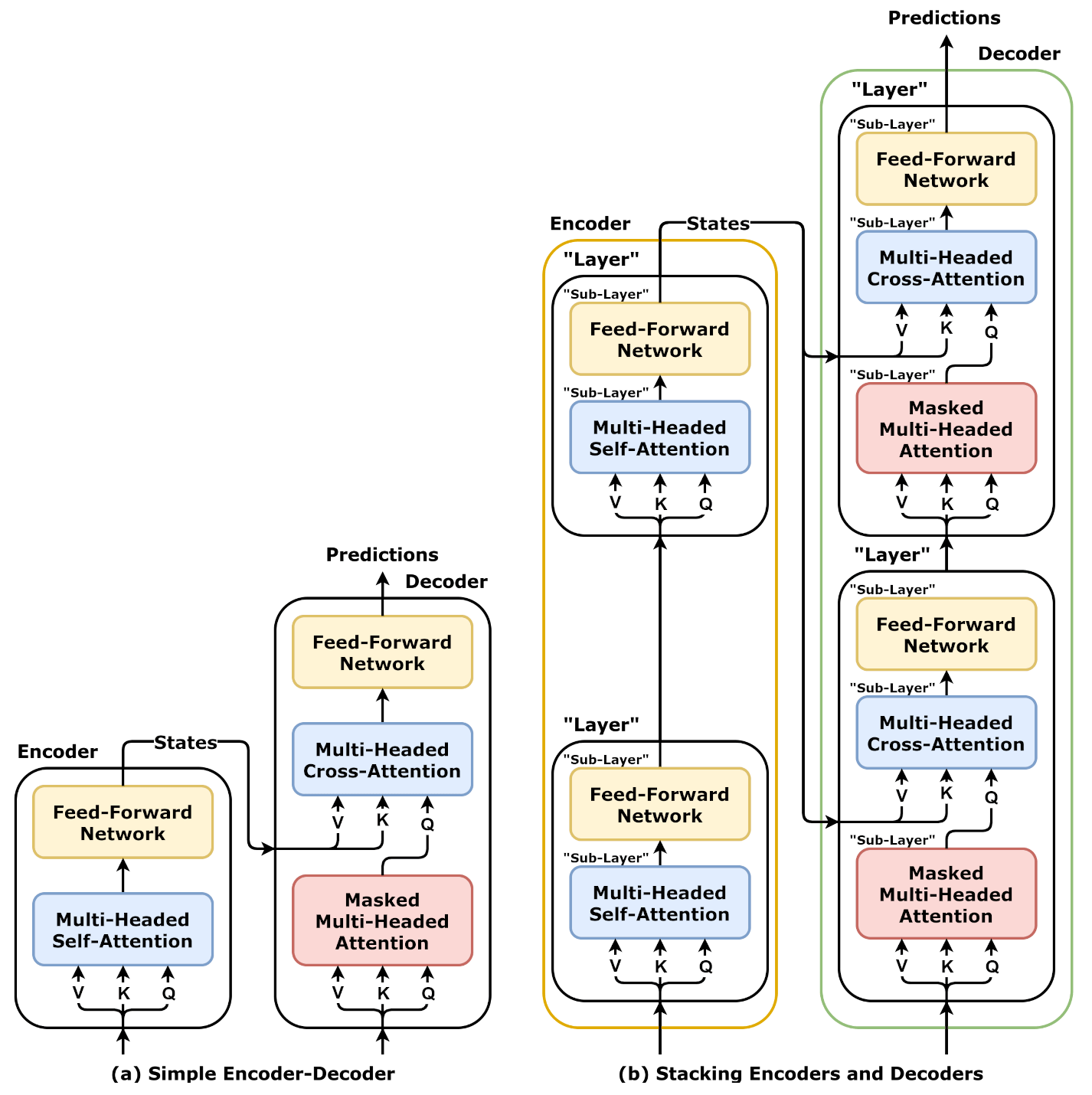

The Transformer is a deep learning neural network algorithm that was invented by Googlers Vaswani et al in 2017. They work by loosely modelling how humans pay attention to contextual clues about which words are more or less important whilst reading. Between 2017 and 2022 lots of incremental improvements were made to the transformer including the ability to generate text based on an input “prompt” ( Radford et al. 2018, Lewis et al 2019 and Raffel et al 2020. ). In 2022, the formulation of Reinforcement Learning Human Feedback (RLHF) by Ouyang et al. at OpenAI made the process of prompting models significantly more intuitive for non-technical users and made user-facing LLM systems like ChatGPT feasible. Of course all of this work can be traced back to earlier breakthroughs in deep learning by people like Hinton, LeCunn and Bengio.

This is all to say that it’s very difficult to predict which incremental advancement will lead to a tipping point that investors think is saleable. If it turns out that we have hit the limits of transformers, we may not see a major breakthrough in AI performance for a long time, leading to a long AI winter. Or, we might find that some new post-transformer architecture emerges from an unknown lab tomorrow and eats OpenAI’s breakfast overnight.

So what happens if that breakthrough comes in another 5 years rather than in the next 6 months?

Challenges for AI Companies Without a Significant Breakthrough

Current Model Capabilities

Current generation frontier models by companies like Google, OpenAI, Anthropic and Mistral are very impressive at some tasks. Extracting information from audio streams or images of hand-written notes, helping software engineers write code more quickly. The kinds of tasks they’re great at are low-risk high-effort grunt work and tasks that can be automatically quality assured (for example generated application code can be unit tested). Even with a non-zero error rate models may be better at dull tasks than a bored human who doesn’t want to be doing them in the first place.

But, let’s face it: the huge amount of money being thrown at LLMs is not to solve the boring tasks but because investors think that it’s possible to significantly reduce costs and “automate the majority of work”. As impressive as current frontier models are, everyone who works with them knows that they are not up to the task of completely automating a large amount of knowledge work (unless you use questionable methods to have the model rate it’s own abilities of course). We’ve seen tonnes of examples of high profile, embarrassing LLM fails in chatbots, search results and even McDonalds recently canned their AI-powered drive through trial.

LLMs are also really good at natural language tasks. That is, tasks that NLP researchers (like myself) have historically found interesting because they unlock other use cases, but which are probably not interesting to end users and consumers. For example, LLMs are great at classifying and categorising text, at extracting names of companies; people and places (NER), at determining whether two sentences imply the same thing or not (NLI) and many others. These use cases can be combined with traditional software engineering to build platforms that do things like make podcasts searchable or make it easy for researchers to find relevant scientific papers. However, selling models to the kinds of people building these systems is a business-to-business play rather than a business-to-consumer play and (as I explain below) it’s a pretty commoditised space to play in too.

Public Perception

Most office workers and knowledge workers are now familiar with LLMs and are using and enjoying them on a regular basis and finding them genuinely useful. However, the general population is a little more wary. A UK Government Survey conducted earlier this year found that only 44% of adults surveyed agreed that AI will benefit society and 20% said disagreed with this statement. Interviewees cited concerns about personal data usage, fake news and cyber crime.

Creators have also been very vocal about the theft of their intellectual property, from books they’ve written to their web content to their visual and audio likenesses. Many artists are starting to poison images of their works online to prevent them from being used to train AI. Many of these fights have been prominently reported in the public eye and will have influenced end users opinions about the moral and ethical positions of AI companies. AI companies don’t help themselves either, from allegedly stealing Scarlett Johansson’s voice likeness to offering to replace striking workers.

Beyond the politics and the drama, when it comes to getting hands on with AI, the picture is also mixed. Some AI products like Cursor, a tool for writing software using an AI assistant have launched to great fanfare and been very well received. Some AI products like the infamous humane pin struggle to find product-market fit and flop. AI features in established products have seen lukewarm receptions. After a short experimentation window, Discord shut down their AI chatbot at the end of 2023. More recently, it turned out that Microsoft’s Office 365 Copilot Pro subscription is so unpopular that MS are giving up trying to sell it as a standalone product and bundling it into their standard Office subscription along with a mandatory price bump. Additionally, even though ChatGPT reportedly has over 200 million weekly active users, only 11 million (approximately 5%) of them are paying for Pro.

Cost

Frontier models are incredibly expensive to run. It is estimated that OpenAI currently loses 1 they make. Microsoft are thought to be losing $20 per user per month on Github Copilot. There is a race-to-the-bottom for LLM API pricing to encourage businesses to build their businesses using a particular provider’s model. Frontier models have become commoditised but they’re not even profitable yet. Companies like Meta, Microsoft and Mistral have been providing small-but-mighty models that can perform a large number of AI tasks locally for free which significantly undercuts frontier model providers, forcing them to respond in kind by releasing lower cost, less powerful versions of their flagship models. Local models can be fine tuned on specific tasks and for some use cases, they can work just as well as frontier models and eat into AI providers’ higher-end customer base too.

There’s a human cost to these models. Many AI products have been enabled by outsourcing the development of datasets and moderation of incoming requests to workers on poverty wages. These workers are regularly reviewing upsetting and offensive material and receiving limited or no support. Earlier this year it was revealed that Amazon’s “just walk out” AI shop was powered by offshore workers paid £1-2 per hour to watch CCTV footage.

There’s an environmental cost to all of this too. A huge amount of ink has already been spilled about the enormous power draw and burden on local water tables needed to support these models. Large companies with commitments to reducing carbon emissions or becoming carbon neutral have started to move in the wrong direction (although the tech sector is far from the only culprit here ).

Some Dilemmas For AI Companies

-

If AI providers can’t promise groundbreaking new models every 6 months or give a confident timeline for when super intelligent models might emerge, then how can they raise investment from institutional investors that want to automate everything and cut costs with AGI?

-

If end-users’ and consumers (who are at best neutral on AI) are begrudgingly paying the current loss-making fee or freeloading using free versions of tools, how can AI companies convince them to pay a profitable rate for access to existing models? Is the proportion of users who are so happy they’d pay 2-3x more big enough to keep these companies afloat?

-

Likewise if commercial consumers of AI APIs are struggling to sell their AI features at the subsidised API price, how are AI companies going to convince them to pay for that same service at a break-even or profitable rate?

-

How does the political climate in the US affect pricing pressure (tariffs on imported GPUs leading to higher CAPEX when building data centres leading to higher “break even” point for AI API providers)?

It looks like AI companies that don’t pivot or diversify might be in a bit of a tight spot in the short term. However, many of these challenges are solvable. It is worth exploring some possible futures and solutions to some of these issues.

Possible Solutions to Challenges

The Miracle That Could Save The AI Companies

The miracle that AI companies are praying for would be a sudden breakthrough stemming from experimental “post transformer” model architectures. . Approaches like Mamba or RWKV or one of their descendants could lead to the continuation of scaling laws which would mean “business as usual” for AI firms.

On the other hand, if a new model architecture doesn’t emerge quickly, AI companies will need to focus on cutting costs and driving up prices of their offerings to make themselves profitable. If they can’t continue to generate record breaking valuations and speculative investment, their record-breaking losses will surely mean that their days are numbered.

Cutting Cost with Miniaturisation and Quantisation and Decentralisation

One possible avenue for exploration is quantisation and model miniaturisation. Through projects like llama.cpp we’ve already seen that it’s possible to run models with many billions of parameters (the ‘neurons’ in the neural network) on a laptop or even a mobile phone. This is possible through the compression of the model parameters to take up significantly less memory, throwing out some information but preserving enough that the model still works a bit like how JPEG image files work. Recently researchers at Microsoft have started to explore whether it’s possible to represent neural network parameters in 1 bit, that is, the smallest possible unit of information. Other researchers found that it might be possible to just throw out a large number of parameters all together. Assuming the performance losses are acceptable or minimal, quantizing flagship models could potentially allow AI companies to serve their APIs at a much lower cost and use less electricity and water cooling. I suspect all of the big providers are already following this particular research thread very closely.

Recent work by Akyürek et al 2024. has shown that state of the art performance can be obtained using small models. Their approach, Test-Time-Tuning, involves slightly tweaking the model’s parameters, normally frozen after training, at runtime. It’s similar to giving examples in your prompt but instead of giving the model something additional to read, we give its brain cells a quick and targeted jolt to focus it on the task at hand. It’s still an emerging technique and we’ve got a lot to learn about how it could be applied in practice and at scale. However, it could potentially accelerate the miniaturisation of LLMs quite significantly.

A complementary approach would be to move to dedicated hardware. GPUs are typically used for graphics processing (that’s where the G comes from) and although there’s a fair amount of mathematical crossover with deep learning, they are not specifically optimised for AI workloads. Some companies like groq are already producing custom chips that are optimised for AI model inference at increased efficiency.

It’s possible (although perhaps less likely) that AI becomes much more de-centralised. New computers and phones now ship with AI-specific chips and both Apple and Microsoft have made headlines recently with their (somewhat different) approaches to local AI. Perhaps OpenAI will end up licensing a miniaturised version of GPT-4 to hardware vendors who will ship it as part of your operating system. That would certainly cut (or perhaps externalise) running costs both fiscal and environmental. Historically vendors have claimed that they’re keeping these models away from end users for safety reasons. On the other hand, assuming no breakthrough, end users already have access to tools like StableDiffusion and Llama 3 and they can already generate fake news and fake images of politicians. Running models locally also makes it difficult for AI companies to capture data for further training.

If Trump’s tariffs come to pass then the cost of running models either in US data centres or on US consumers’ devices goes up. For AI companies this incentivises them to either offshore inference capabilities outside of the US (since services are not subject to tariffs ) or pursue the decentralised “run on your device” model explored above. Of course, end consumers will also have to deal with a price rise if they want that new phone or laptop which could put AI-capable devices, and thus, AI companies’ models, out of reach of more US customers.

Silicon Valley Pivots away from the ‘AI’ Brand

It’s also possible that dedicated AI companies like OpenAI, Anthropic and Mistral will go the way of the dodo, their offerings shuttered and their IP auctioned off to the FAANGs of this world. Make no mistake, LLMs will not go anywhere. The companies that buy up the IP could find ways to run LLMs at a low cost and have them run on your device (like Apple are starting to do and Microsoft are experimenting with). In this scenario, the consumer-facing LLMs just become a value-add feature for whatever the company is selling: their devices, their operating system or their social media platform.

When GPT-4 first launched and the assumption was that scaling laws would hold and GPT-5 would be exponentially better, it was a commonly held belief that OpenAI’s dominance of the LLM space was unassailable. However, another interesting side effect of “no scaling law and no big breakthrough” is that it calls into question the unassailable position of big AI companies. We’re already seeing “super mini” LLMs with 8 billion parameters that can compete with GPT-4 at specific tasks, in some cases across many different benchmarks. If ‘Open Source’ models can operate in the same ballpark as GPT-4 then there’s less of a reason for B2B customers to stick with OpenAI.

All of this said, running LLMs at scale across clusters of GPUs is not a trivial activity that a 4-person startup is going to want to try to take on. I envisage further commoditisation of the LLM API world with more services like groq and fireworks offering cheap-as-chips LLM inference using “good enough” models that OpenAI can’t profitably compete with. Firms that are big enough might decide to build an internal LLMOps team and manage this stuff themselves as they scale up.

The PR Issue: Winning People Over

I live almost exclusively in an algorithmic filter bubble so its entirely possible that people care a lot less about the external costs of AI than I do. However, it does seem to be the case that the general public is unsure about AI, associating it with words like “scary” and “worry”. 65% of respondents to a recent UK survey recalled news stories representing AI in a negative light. Given the seemingly endless stream of high profile AI fails and the amount of hype and corresponding grift in the space, it’s understandable why.

While the scaling law held, big tech leaders have asked for record-breaking investment in AI to help us unlock super intelligent AI and use it to solve societal problems, climate goals be damned. However, in lieu of an all-powerful GPT-5, its a little easier for investors and the general public to take these claims with a pinch of salt. Against the backdrop of user-hostile increases to [subscription fees](https://www.forbes.com/sites/tonifitzgerald/2024/08/14/rising-streaming-subscription-prices-how-high-is-too-high-for-netflix-disney-and-more/, new limitations and more ads across the tech industry, AI companies will have to tread carefully. They will have to be quicker to demonstrate the value of what they’re building right now and dial back the futuristic rhetoric. They will have to show their distrustful customer-base that they are committed to climate goals. They will need to take practical steps towards more transparent and equitable data collection. They’ll have to make sure that offshore workers are paid a fair wage and that they’re not ruining anyone’s mental health by having them look at the worst of humanity all day.

Whilst many of these commitments and changes are going to require firms to spend more money rather than less, the wheels seem to have come off the “blind faith in the future” option. That means leaders are faced with making amends with the public and making the value that they offer much clearer if they want to win enough business to survive.

Conclusion

Trying to predict the future is hard and as we’ve seen, science moves in unpredictable fits and spurts rather than predictable incremental deliverables. With an increasingly skeptical investor-base and customer-base and seemingly limited prospects of a “miracle breakthrough”, AI companies are in a tough spot and they’ve got some choices to make.

Although the train might be leaving the AI hype station for now, LLMs are here to stay and researchers will continue to make incremental progress towards their next goals and towards AGI. We might see another AI winter while we wait for the next big breakthrough to happen but we will also see some genuinely useful new software emerge from the 2022-2024 boom.

Alternatively, it might be the case that as I write this article and prepare to hit “publish”, a team of researchers at Google are finishing up their paper on a new model that can trounce GPT-4 and run on a pocket calculator. We certainly live in interesting times!